System Architecture

Our system architecture uses several components of the Mixed Reality Toolkit (MRTK), together with external APIs that extract medical terms from speech and find term definitions and diagrams.

Our application consists of three scenes: the medical dictionary, which extracts medical terminology using Natural Language Processing (NLP); the notes scene, which lets junior doctors attach notes to walls so that they can remember dosages or drugs more easily; and the patient information scene, which displays patient information such as brain scans or vital information from an external source.

All scenes run on the HoloLens, which is our hardware of choice, as explained on the Research page. We use Unity3D as middleware to integrate the functionality provided by the Mixed Reality Toolkit (MRTK), and the language we use is C#.

Medical Dictionary Architecture

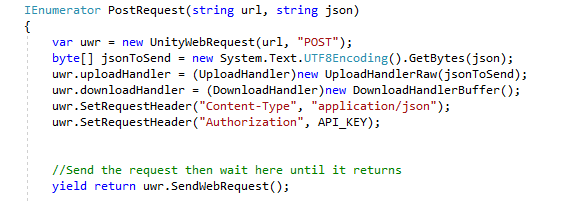

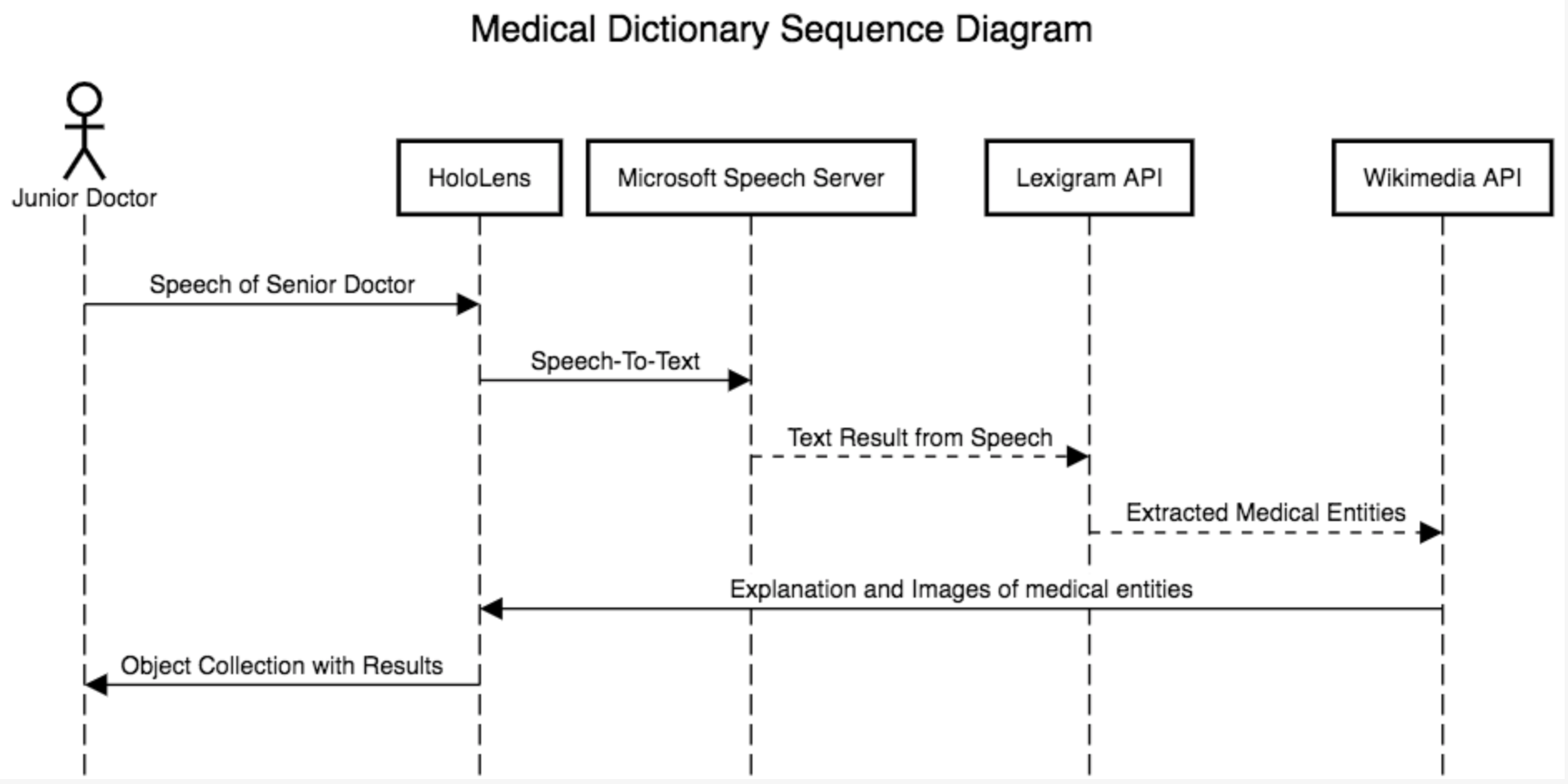

When recording is enabled, the HoloLens uses a mixture of on-board computation and cloud-based algorithms from the Microsoft Speech library to convert the user's speech to text. Once the speech has been converted, our code uses .dictationResult [1] to get the final hypothesis of the text. This text is then passed to the Lexigram API [2], a medical extraction engine whose API endpoint extracts all the medical terms.

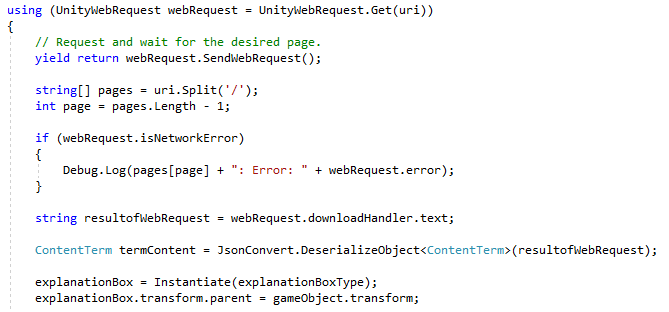

The extracted terms are then passed to the Wikimedia API [3], which finds the matching articles on Wikipedia and extracts the top definition, i.e. the first paragraph, which is usually the most relevant and the most concise.
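The definition lookup described above can be sketched as follows. This is a minimal illustration in Python (the project itself is written in C#), assuming the English Wikipedia endpoint and the `extracts` property of the MediaWiki query API; the function names and the sample response are hypothetical and are not taken from our code base.

```python
import json
from urllib.parse import urlencode

# Assumption: the English Wikipedia endpoint of the MediaWiki API.
API_URL = "https://en.wikipedia.org/w/api.php"


def build_extract_url(term: str) -> str:
    """Build a query URL asking for the plain-text intro of the article `term`."""
    params = {
        "action": "query",
        "format": "json",
        "prop": "extracts",   # article text extracts
        "exintro": 1,         # only the section before the first heading
        "explaintext": 1,     # plain text instead of HTML
        "redirects": 1,       # follow redirects to the canonical article
        "titles": term,
    }
    return f"{API_URL}?{urlencode(params)}"


def first_paragraph(response_text: str):
    """Return the first paragraph of the extract, or None if no article matched."""
    pages = json.loads(response_text).get("query", {}).get("pages", {})
    for page in pages.values():
        extract = page.get("extract", "").strip()
        if extract:
            return extract.split("\n")[0]
    return None


# Hand-written response in the shape the API returns (placeholder text, not real output).
sample_response = json.dumps({"query": {"pages": {"123": {
    "pageid": 123,
    "title": "Example term",
    "extract": "First paragraph of the article.\nSecond paragraph.",
}}}})

print(build_extract_url("Example term"))
print(first_paragraph(sample_response))
```

Keeping the URL construction and the response parsing separate from the network call makes both steps easy to check without a connection.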

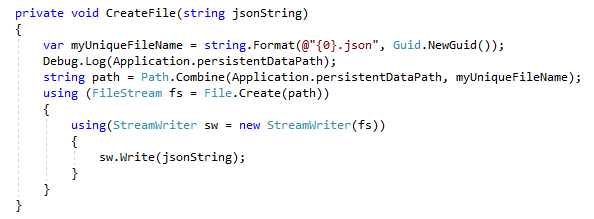

Once the term is found, we make another call to the Wikimedia API to get the relevant image; if one is found, we download it as a texture onto the HoloLens [4] and Unity renders it. Once all the terms are found, the user can export them in JSON, a widely used format that is easy for both humans and machines to read.
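The image lookup can be sketched in the same spirit. The Python example below is illustrative only (the project code is C#) and assumes the `pageimages` property of the MediaWiki query API; the function names, the thumbnail width and the sample response are hypothetical.

```python
import json
from urllib.parse import urlencode

# Assumption: the English Wikipedia endpoint of the MediaWiki API.
API_URL = "https://en.wikipedia.org/w/api.php"


def build_image_url(term: str, width: int = 400) -> str:
    """Build a query URL asking for a thumbnail of the article's lead image."""
    params = {
        "action": "query",
        "format": "json",
        "prop": "pageimages",
        "piprop": "thumbnail",
        "pithumbsize": width,  # requested thumbnail width in pixels
        "redirects": 1,
        "titles": term,
    }
    return f"{API_URL}?{urlencode(params)}"


def thumbnail_source(response_text: str):
    """Return the thumbnail URL, or None when the article has no image."""
    pages = json.loads(response_text).get("query", {}).get("pages", {})
    for page in pages.values():
        source = page.get("thumbnail", {}).get("source")
        if source:
            return source
    return None


# Hand-written responses in the shape the API returns (not real output).
with_image = json.dumps({"query": {"pages": {"123": {
    "title": "Example term",
    "thumbnail": {"source": "https://example.org/thumb.png", "width": 400, "height": 300},
}}}})
without_image = json.dumps({"query": {"pages": {"123": {"title": "Example term"}}}})

print(thumbnail_source(with_image))
print(thumbnail_source(without_image))
```

Returning `None` when no thumbnail exists mirrors the "if found" condition: the download and rendering step is simply skipped for terms without an image.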

The exported file could be imported into a database or passed to another headset such as the Vuzix M300XL [5]. The file is stored on the HoloLens and can be downloaded from the HoloLens Device Portal.
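As an illustration of the export step, the following Python sketch writes a list of terms to a JSON file and reads it back. It is not our C# implementation: the `TermEntry` fields, the file name and the top-level `terms` key are assumptions made for the example.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass
class TermEntry:
    """One extracted term (hypothetical schema)."""
    term: str
    definition: str
    image_url: Optional[str] = None


def export_terms(entries: List[TermEntry], path: str) -> None:
    """Write all entries to `path` as human-readable JSON."""
    payload = {"terms": [asdict(entry) for entry in entries]}
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(payload, handle, ensure_ascii=False, indent=2)


def load_terms(path: str) -> List[TermEntry]:
    """Read entries back, as a database importer or another headset might."""
    with open(path, encoding="utf-8") as handle:
        return [TermEntry(**item) for item in json.load(handle)["terms"]]


entries = [
    TermEntry("example term", "Placeholder definition text."),
    TermEntry("another term", "Placeholder definition text.", "https://example.org/thumb.png"),
]
export_path = os.path.join(tempfile.mkdtemp(), "terms.json")
export_terms(entries, export_path)
restored = load_terms(export_path)
print(restored)
```

Because the file is plain JSON, any consumer that agrees on the field names can read it without depending on the HoloLens application.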

Notes Scene Architecture

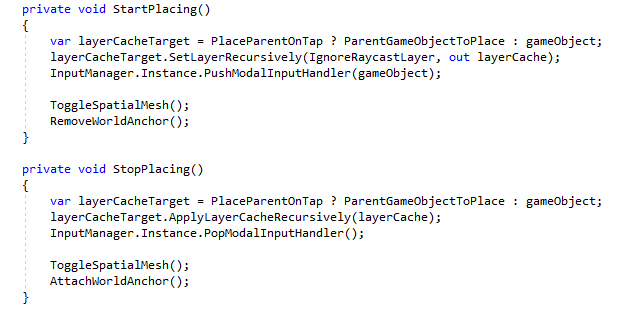

The notes scene uses one of the most advanced features of the HoloLens: spatial mapping. The user creates a note and taps; the HoloLens then scans the room and finds all the flat surfaces using its surface detection algorithm, which is included as standard in the HoloToolkit. A derivative of the tapToPlace.cs script from the MRTK [6], heavily edited to add features, is then called and tries to place the note on a planar surface.

During placement an anchor is also added, which means that when the junior doctor closes the app and reopens it, the note is in the same place, in the same room, where it was left. To achieve this we use the Spatial Anchor Store, which is where we store the anchor of each note. The store is a specific file on the HoloLens that is inaccessible to us and abstracts away writing the anchor locations directly. After the note is placed, Speech-To-Text is used again so that the doctor can dictate content onto the note.
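The persistence behaviour can be pictured with a small stand-in. The Python sketch below is an analogy only and is not the HoloLens API: the real store is opaque and managed by the device, whereas this hypothetical `AnchorStoreSketch` class simply keeps a position per anchor id in a JSON file so that a second instance (a "reopened app") finds the same anchors.

```python
import json
import os
import tempfile
from typing import Dict, List, Optional, Tuple

Position = Tuple[float, float, float]


class AnchorStoreSketch:
    """File-backed map from anchor id to position (illustrative stand-in)."""

    def __init__(self, path: str) -> None:
        self._path = path
        self._anchors: Dict[str, List[float]] = {}
        # Reload anchors saved by a previous session, if any.
        if os.path.exists(path):
            with open(path, encoding="utf-8") as handle:
                self._anchors = json.load(handle)

    def save(self, anchor_id: str, position: Position) -> None:
        """Store or overwrite an anchor and persist the whole map."""
        self._anchors[anchor_id] = list(position)
        with open(self._path, "w", encoding="utf-8") as handle:
            json.dump(self._anchors, handle)

    def load(self, anchor_id: str) -> Optional[Position]:
        """Return the stored position, or None for an unknown id."""
        stored = self._anchors.get(anchor_id)
        return tuple(stored) if stored is not None else None

    def all_ids(self) -> List[str]:
        return sorted(self._anchors)


store_file = os.path.join(tempfile.mkdtemp(), "anchors.json")

# First session: the note is placed and its anchor saved.
AnchorStoreSketch(store_file).save("note-1", (0.5, 1.2, 2.0))

# Second session: a fresh instance restores the anchor from disk.
reopened = AnchorStoreSketch(store_file)
print(reopened.all_ids(), reopened.load("note-1"))
```

The point of the analogy is the lookup by id: each note only needs to remember the name of its anchor, and the store takes care of where that anchor is.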

Patient Scene Architecture

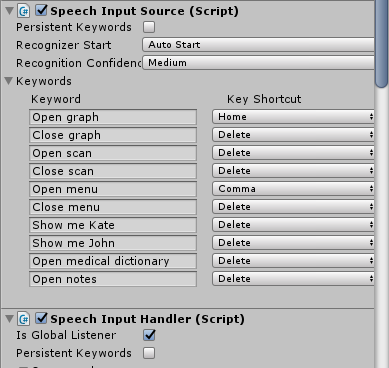

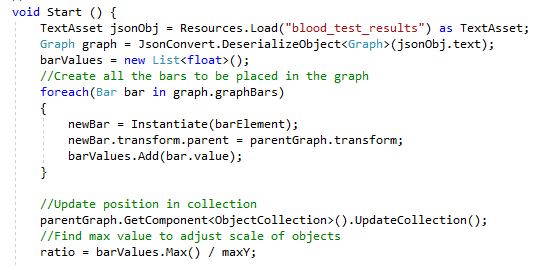

Our patient scene focuses heavily on UI/UX, so we used fake patient data extracted from Synthetic Health's Synthea [7] and concentrated on presenting it clearly and attractively. We use the "phrase recogniser" [1] from the MRTK voice input to display content via voice commands when the junior doctor's hands are not free.
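Conceptually, the voice commands map recognised phrases to display actions. The Python sketch below illustrates that mapping only; it does not use the MRTK, and the `PhraseDispatcher` class, the phrases and the handlers are hypothetical.

```python
from typing import Callable, Dict, Optional


class PhraseDispatcher:
    """Maps recognised phrases to actions (illustrative, not the MRTK API)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[], str]] = {}

    @staticmethod
    def _normalise(phrase: str) -> str:
        # Ignore case and surrounding whitespace when matching phrases.
        return phrase.strip().lower()

    def register(self, phrase: str, handler: Callable[[], str]) -> None:
        self._handlers[self._normalise(phrase)] = handler

    def on_phrase_recognised(self, phrase: str) -> Optional[str]:
        """Run the handler for `phrase`; return None for unknown phrases."""
        handler = self._handlers.get(self._normalise(phrase))
        return handler() if handler is not None else None


dispatcher = PhraseDispatcher()
# Hypothetical commands; the handlers stand in for showing a UI panel.
dispatcher.register("show brain scan", lambda: "brain scan panel shown")
dispatcher.register("show blood tests", lambda: "blood test panel shown")

print(dispatcher.on_phrase_recognised("Show Brain Scan"))
print(dispatcher.on_phrase_recognised("open the window"))
```

Adding a new voice command then only requires registering one more phrase, without touching the recognition code.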

We then load (currently) a brain scan and blood tests for two patients from two JSON-formatted files. Our code already contains the functionality, similar to the downloadable texture mentioned above, to download the data from an external database instead, but connecting to one was beyond the scope of our project. We then animate the graph and allow the junior doctor to retrieve the information in an easy and non-invasive way.
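A minimal sketch of the loading step is shown below in Python, for illustration only. The JSON schema (`name`, `brain_scan`, `blood_tests`) and the values are invented for the example and do not reflect the format of our files; the loader accepts text, so the same function would work whether that text comes from a local file or from an external source.

```python
import json
from typing import Any, Dict, List, Tuple

# Hypothetical patient record; field names and values are made up.
SAMPLE_PATIENT = """
{
  "name": "Patient A",
  "brain_scan": "scan_a.png",
  "blood_tests": [
    {"test": "Test 1", "value": 13.5, "unit": "g/dL"},
    {"test": "Test 2", "value": 7.2, "unit": "mmol/L"}
  ]
}
"""

REQUIRED_KEYS = ("name", "brain_scan", "blood_tests")


def load_patient(text: str) -> Dict[str, Any]:
    """Parse a patient record and check that the expected fields exist."""
    data = json.loads(text)
    missing = [key for key in REQUIRED_KEYS if key not in data]
    if missing:
        raise ValueError(f"patient record is missing fields: {missing}")
    return data


def graph_points(patient: Dict[str, Any]) -> List[Tuple[str, float]]:
    """Return (label, value) pairs ready to be plotted on the graph."""
    return [(item["test"], float(item["value"])) for item in patient["blood_tests"]]


patient = load_patient(SAMPLE_PATIENT)
print(patient["name"], graph_points(patient))
```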

Sequence Diagram

We chose these three scenes because we needed a way to enhance the learning experience of junior doctors; we started from a rough idea of how we wanted our application to behave and to help doctors.

In the intended flow, the junior doctor first goes to the patient scene and learns any information needed about the patient. The dictionary scene follows, which allows the doctor to accompany a senior doctor on a ward walk, extract terms and learn any medical terminology. After the ward walk is over, the junior doctor can go to the notes scene, where anything learned or that must be remembered can be saved all around the hospital using spatial mapping.

We made a sequence diagram for our most important and most complex scene, the dictionary scene; all the calls can be seen below.

Architecture Design Patterns

When developing this application, our team was guided by the idea that this project would lay the foundation for future work in the field of Mixed Reality for GOSH DRIVE. To ensure our tool would be up to standard, we defined a set of design patterns that our software must adhere to, as criteria for efficiency.

Extensibility

As Unity revolves around objects and the relations between them, our goal was to avoid tight dependencies on objects that are likely to change in the future. To reduce coupling, we used public variables wherever possible to supply the objects needed for a given piece of functionality, so that a new object can take the place of the original without refactoring, as long as it conforms to the same type. Tags proved to be another useful way of identifying objects: they drastically reduced the need to search for objects by their relations, which change frequently. Attaching the respective tag to a new object is therefore enough to make it usable.
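The two ideas above, supplying dependencies from outside and finding objects by tag, can be illustrated with a small Python sketch. It is an analogy rather than Unity code: `Scene`, `TextLabel`, `DefinitionPanel` and the tag name are all hypothetical.

```python
from typing import Dict, List


class TextLabel:
    """Stand-in for any object that can display text."""

    def __init__(self) -> None:
        self.text = ""

    def set_text(self, text: str) -> None:
        self.text = text


class DefinitionPanel:
    """Receives its label from outside instead of searching for it."""

    def __init__(self, label: TextLabel) -> None:
        # Any object with a compatible set_text method can be swapped in.
        self.label = label

    def show(self, definition: str) -> None:
        self.label.set_text(definition)


class Scene:
    """Minimal tag registry: objects are looked up by tag, not by hierarchy."""

    def __init__(self) -> None:
        self._by_tag: Dict[str, List[object]] = {}

    def add(self, obj: object, tag: str) -> None:
        self._by_tag.setdefault(tag, []).append(obj)

    def find_with_tag(self, tag: str) -> List[object]:
        return list(self._by_tag.get(tag, []))


scene = Scene()
label = TextLabel()
scene.add(label, "DefinitionLabel")  # hypothetical tag name

panel = DefinitionPanel(scene.find_with_tag("DefinitionLabel")[0])
panel.show("Placeholder definition text.")
print(label.text)
```

Replacing the label with a different implementation only requires tagging the new object; `DefinitionPanel` itself does not change.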

Prefabs are another way to simplify future reuse of the elements that currently serve as building blocks in our scenes. They are grouped by the scene they appear in and contain all the scripts they need. Thus, in future work, developers can reuse these elements with a simple drag and drop.

Clarity

Functionality is separated so that different components have high cohesion, meaning that scripts have one main purpose and tasks that are unrelated to the activity of a certain component are not delegated to it.

Removing Duplication

Although implementing animations on objects introduces dependencies (for example, two elements might both use sliding but in different scenarios), we aimed to reduce duplication by generalising and merging the different cases instead of repeating code.
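As an example of this kind of generalisation, a single parameterised slide function can serve every element that slides, with the start point, end point and duration passed in by the caller. The Python sketch below is illustrative and is not taken from our C# scripts; the function names and the linear interpolation are assumptions.

```python
from typing import Tuple

Vector = Tuple[float, float, float]


def lerp(start: float, end: float, t: float) -> float:
    """Linear interpolation between start and end for t in [0, 1]."""
    return start + (end - start) * t


def slide(start: Vector, end: Vector, duration: float, elapsed: float) -> Vector:
    """Position of a sliding element `elapsed` seconds into the animation."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    # Clamp progress so the element stops exactly at the end point.
    t = min(max(elapsed / duration, 0.0), 1.0)
    return tuple(lerp(s, e, t) for s, e in zip(start, end))


# Two different scenarios reuse the same function with different parameters.
menu_position = slide((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), duration=2.0, elapsed=1.0)
panel_position = slide((0.0, 2.0, 1.0), (0.0, 0.0, 1.0), duration=0.5, elapsed=3.0)
print(menu_position, panel_position)
```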

Testability

To ensure that our application works as expected and to avoid potential fragility in the future, we implemented unit tests and ran them with the Unity Test Runner, which uses an open-source testing library for .NET languages. More information on this topic can be found in the Testing section.