Legal Issues

Adapted from the Legal Issues Report by Petros Xenofontos (Team Leader)

As discussed with the client and in line with our UCL-assigned project, the delivered application will be a Proof of Concept (PoC) demonstrating potential uses of the HoloLens by doctors during their training. Therefore, the delivered application will not use encryption algorithms or security standards as strong as those required in a medical environment. Furthermore, as discussed with the client, the application will use "fake" patient data (generated by Synthea [1]) to demonstrate the potential of the software as if it were used in an actual medical environment; the security is therefore sufficient for such an application. As a result, the team cannot guarantee, and is not liable for, any problems encountered as a result of deploying the application outside the demonstration environment. The team is responsible for delivering what the client (Great Ormond Street Hospital, DRIVE, NTTData) and the university (UCL) have agreed on, namely the application together with the completed features specified in the MoSCoW requirements presented to both the client and the university. Specifically, the team is responsible for delivering the application with all Must have features completed, alongside a number of Should have features and potentially some Could and Would have features.

Distinguishing between patents, trade secrets and copyright is important in determining the type of IP that applies to the project. We are not developing a "new" and "non-obvious" invention (i.e. one that is more than an obvious advancement of existing technology); we are simply adding certain features to the HoloLens platform. Hence a patent is not suitable. A trade secret is also not suitable, as the work should be Open-Source and in fact discoverable by others, so that they can improve it. Taking the above into consideration, the project falls under the copyright category. While the MixedRealityToolkit [2] is used as described below, much of the structure of the application is "original". As a result, and in line with the 1992 case Computer Associates International, Inc. v. Altai, Inc., the application falls under copyright law, and its original parts (i.e. those written by the team) can be copyrighted in order to protect the work and effort put in by the team.

The content provided through the MediaWiki API [8] falls under a Creative Commons (CC) license; hence, we are allowed to use it freely and to copy and redistribute it as required, provided that credit is given. As a result, we are able to extract terms along with their definitions from the MediaWiki API and save them in a file without worrying about other legal implications such as plagiarism. Furthermore, we explicitly state in all our documents that we are using the MediaWiki API, so sufficient credit is given to the attributed authors and services.

At the time of writing, one rather large open-source library used in the application is the MixedRealityToolkit for Unity. It falls under the MIT license, which means its components can be used and modified as long as the license and copyright notice are included in the application files (which they are). This library provides interaction with the HoloLens as well as front-end graphics. Another external library used (not included in Unity's standard libraries) is "JSON .NET For Unity" [3], which parses the responses of the medical extraction API (discussed later). It falls under Unity's "Asset Store Terms of Service" [4], which allow the asset to be used for personal and commercial purposes but not to be redistributed on its own. We use core Unity libraries for the majority of operations in our application; as per the license agreement, we are eligible to "sell" and "distribute" a commercial product made with Unity, provided that the team's revenue or funding did not exceed $100,000 during the last fiscal year (which is true). However, if the application were to be sold by a company exceeding this amount, this might change, and Unity Pro [5] or Unity Enterprise might have to be used to develop the application further. Finally, the Lexigram API [6] provides the main back-end for the medical extraction. While it is not Open-Source, its Terms of Service (ToS) indicate that it stores only the data absolutely necessary to produce the required output. In addition, as the free version of the service is used, the provider has no obligation towards us to keep the service online at all times; this would change if a license were bought, which would create a contract with the provider of the API, as stated in the ToS.

As discussed with our client, the medical extraction from speech is considered secure: even if an eavesdropper is present, no patient names are mentioned in this medical context, no photographs are taken, and the application does not "tag" the data it sends in any way. In addition, the Lexigram API is HIPAA [7] compliant, meaning that it complies with US regulations on the storage and processing of medical data.

To ensure GDPR compliance, since we use speech-to-text to extract medical entities, the application will ensure that the connection from the HoloLens to the API is encrypted and that the identity of neither the doctor wearing the device nor the doctor speaking is revealed. Furthermore, an indicator will be shown to inform the user that the HoloLens is actively recording voice, and the user will be asked whether our application may use the device microphone. This ensures that we have the user's consent and that the user is aware of any recording. In the future, any real patient data used will comply with the NHS standards for processing patient data, such as ensuring that the data is anonymised and encrypted when transferred. Digital signatures will also be used to ensure the authenticity of client and server, to help protect against potential cyber-attacks. However, as discussed with our client, we are only using fake patient data for now, so the GDPR regulations only apply to the speech-to-text part. Finally, if doctor data must be used, we will keep it to a minimum and will not store it, using it for the current session only.

User Manual

Upon opening the application, the main menu pops up in front of the user. There are three options, one for each scene; from left to right, these are the patient scene, the medical dictionary and the notes scene.

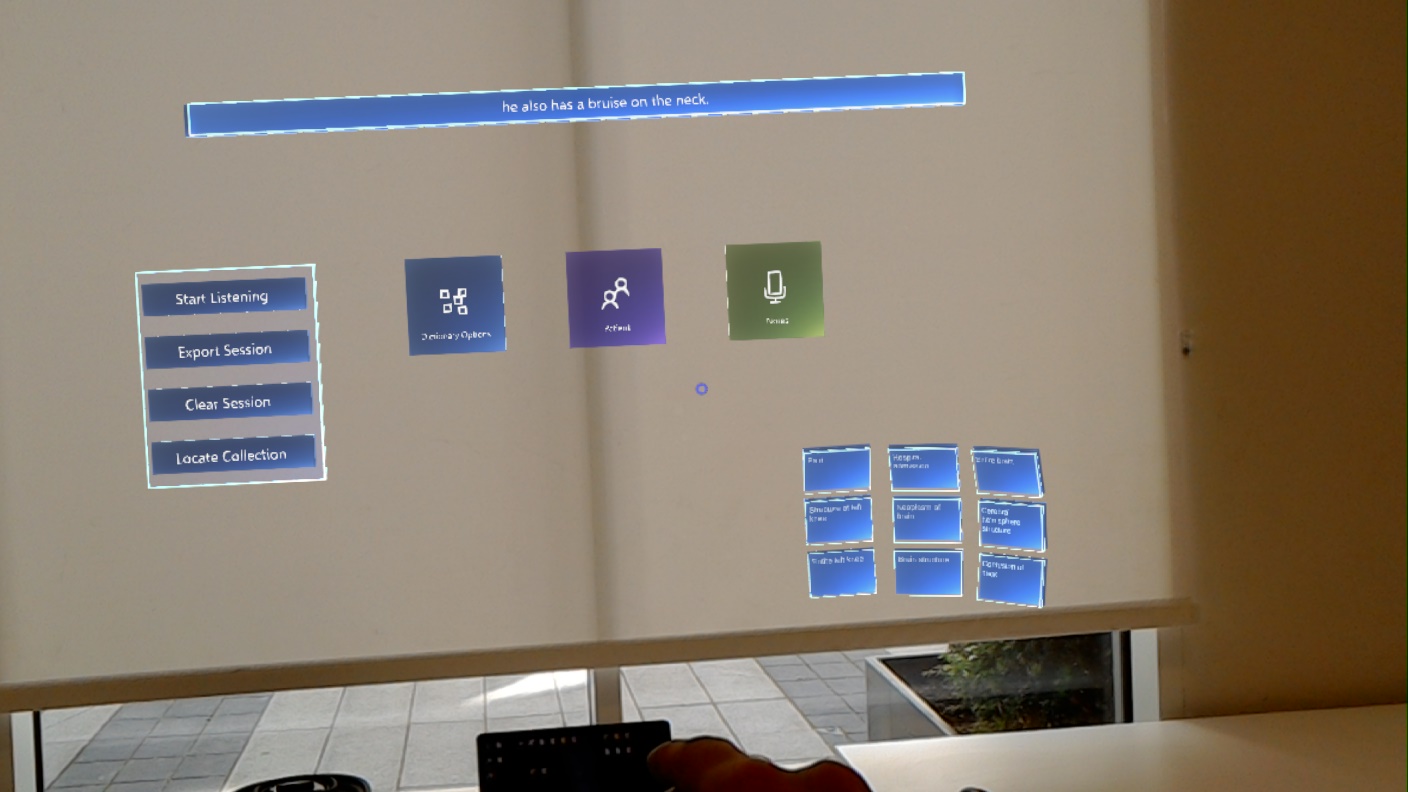

Every scene has a menu: gazing at the menu button of the scene you are currently in opens an instruction panel listing the available voice commands and functionality of that scene. Below is an example for the dictionary scene.

In the dictionary scene, the user can tap the "dictionary options" button to open a pop-up menu and then press "Start Listening" to record either their own voice or that of a senior doctor. The definitions of the extracted terms can be located by pressing the "locate collection" button in the pop-up menu or by saying "find collection". An arrow then appears and guides the user towards the object collection.
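The arrow's behaviour can be illustrated with a small sketch. The Python code below is not taken from the application (which is a Unity project); it assumes a Unity-style left-handed, Y-up coordinate system in which +X is to the user's right when facing +Z, and computes the signed horizontal angle the arrow would need to turn from the user's gaze direction towards the collection. The function names `yaw_degrees` and `arrow_yaw_degrees` are hypothetical.

```python
import math


def yaw_degrees(x: float, z: float) -> float:
    """Heading of a horizontal vector, measured from +Z towards +X (Unity-style)."""
    return math.degrees(math.atan2(x, z))


def arrow_yaw_degrees(head, gaze_forward, target) -> float:
    """Signed angle in [-180, 180) from the gaze direction to the target.

    head, gaze_forward and target are (x, y, z) tuples. The vertical (y)
    component is ignored, so the arrow only turns left or right.
    Positive values mean the target is to the user's right (assumed convention).
    """
    to_target_x = target[0] - head[0]
    to_target_z = target[2] - head[2]
    delta = yaw_degrees(to_target_x, to_target_z) - yaw_degrees(gaze_forward[0], gaze_forward[2])
    # Wrap into [-180, 180) so the arrow always indicates the shorter turn.
    return (delta + 180.0) % 360.0 - 180.0
```

For example, with the user at the origin looking along +Z and the collection at (2, 0, 0), the function returns 90 degrees, i.e. the arrow should point to the right. Ignoring height keeps the arrow readable when the collection is above or below eye level.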

Gazing at a term makes its definition pop up, and tapping the term makes its corresponding image pop up next to it.

In addition, the user can press "export collection" in the pop-up menu, which creates a JSON file on the HoloLens that is accessible via the HoloLens Device Portal. JSON is both machine-readable and human-readable, so the exported collection can be used for further study.
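The export step can be sketched as follows. The application's actual file layout is not documented here, so the field names (`term`, `definition`, `image`), the top-level `terms` key and the function names are all hypothetical; the sketch only shows how a collection of extracted terms could map to a JSON document that is readable by both machines and humans.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass
class Term:
    # Hypothetical record for one extracted term in the collection.
    term: str
    definition: str
    image: Optional[str] = None  # e.g. a file name or URL of the term's image


def collection_to_json(terms: List[Term]) -> str:
    """Serialise the collection; indent=2 keeps the output human-readable."""
    return json.dumps({"terms": [asdict(t) for t in terms]}, indent=2, ensure_ascii=False)


def export_collection(terms: List[Term], path: str) -> None:
    """Write the collection to a UTF-8 encoded JSON file at the given path."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(collection_to_json(terms))
```

Indented output costs a few extra bytes but makes the file easy to inspect directly after downloading it from the Device Portal.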

From the menu of each scene, the user can then select another scene to transition to. The transition happens instantaneously, and another menu pops up, following the same principle as above: the information about each scene is shown on the corresponding scene button. The notes scene has an additional button showing a pencil, which creates a new note. The user can create a note either by pressing that button or by saying "Create Note".

By tapping on a note, the user can place it on any surface. The surface is indicated with a white mesh, and the note is placed onto it.

To record something on a note, the user can either say "Start recording" or press the microphone icon.

In the patient scene, the user can choose which patient's information to view by saying "Show me Kate" or "Show me John". After that, the user can say "Open Menu" or press the panel; information is also provided on the panel. The user can then select the brain scan or the graph to view it, or use the voice commands "Show scan" or "Show graph". The user can interact with the brain scan by gazing at it and dragging left/right/forward/backward to change the slicing.
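One way the drag-to-slice interaction could work is sketched below; it is an illustrative assumption, not the application's code. A signed hand displacement along one axis (for example left/right) is converted into a whole number of slices and clamped to the scan's range; forward/backward dragging could drive a second slicing axis with the same function. The function name, its parameters and the metres-per-slice sensitivity are hypothetical.

```python
def slice_index(start_index: int, drag_metres: float, metres_per_slice: float, n_slices: int) -> int:
    """Map a hand drag along one axis to a slice of the scan volume.

    start_index: slice shown when the drag began.
    drag_metres: signed hand displacement since the drag began.
    metres_per_slice: how far the hand must move to advance one slice.
    n_slices: number of slices in the scan along this axis.
    """
    if n_slices <= 0 or metres_per_slice <= 0:
        raise ValueError("n_slices and metres_per_slice must be positive")
    # Nearest whole number of slices covered by the drag.
    steps = round(drag_metres / metres_per_slice)
    # Clamp so dragging past either end stays on the first or last slice.
    return max(0, min(n_slices - 1, start_index + steps))
```

Measuring the drag relative to where it began, rather than accumulating per-frame deltas, avoids drift from rounding small movements on every frame.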

Deployment Manual

Once the source code has been received, deployment is fairly straightforward. First, the user must install the relevant tools, which are essentially responsible for building the application, as instructed by the Microsoft Mixed Reality Academy. The tools can be found on the Microsoft Mixed Reality Academy and are the following:

- Windows 10 Pro, Enterprise or Education, as Hyper-V must be supported.

- Visual Studio 2017

- Windows 10 SDK 10.0.18362.0

- HoloLens (1st gen) Emulator (for running the application if no device is available)

- Unity 2018.3 LTS Version (Long Term Support)

- HoloToolkit from the htk_release branch

After downloading and installing the above, open our project in Unity, open project/Assets/Scenes/dictionary and then select "Build Settings" from the "File" menu of Unity. Then, as the HoloToolkit is already installed in the scene, open the "HoloToolkit" menu and select "Apply mixed reality project settings". After that, open "Build Settings" again and press the "Add scenes" button; the scenes folder of our application opens. Select "Patient", "Notes", "home" and "base_scene", and then click "Build".

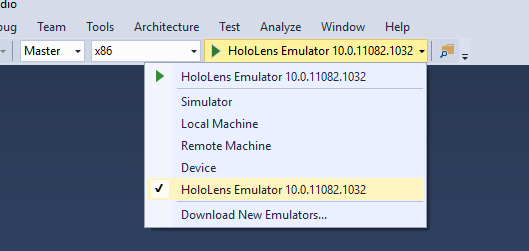

A dialog will pop up asking for a folder; any folder should be fine, but it has to be empty. Unity will show a progress bar, and once the build completes the folder should open. Open the .sln file and Visual Studio 2017 should start. At the top, next to the green play icon, press the drop-down arrow and select "Device".

Connect your HoloLens device, select "Release" instead of "Master" from the configuration drop-down menu, and press the green play icon to start the deployment. Visual Studio should say "Deployment Succeeded", and on the HoloLens "Made with Unity" should appear; after a short period, the main menu will appear with its animation.

Porting to HoloLens 2

Our application could be ported to HoloLens 2 by following the porting guide here, which essentially requires four steps.

Building our application for the HoloLens 2 was not among our requirements, as we were only supplied with the HoloLens 1, and the HoloLens 2 was only announced on February 24, 2019. The documentation for porting was released in the last week of April, which was too late for us to port the application, carry out the relevant Quality Assurance testing and ensure that we delivered a robust, fully working application. Nevertheless, future adopters of our project should be able to port it with relative ease, as almost all of our components use prefabs/components from the HoloToolkit, which is what the porting guide uses.

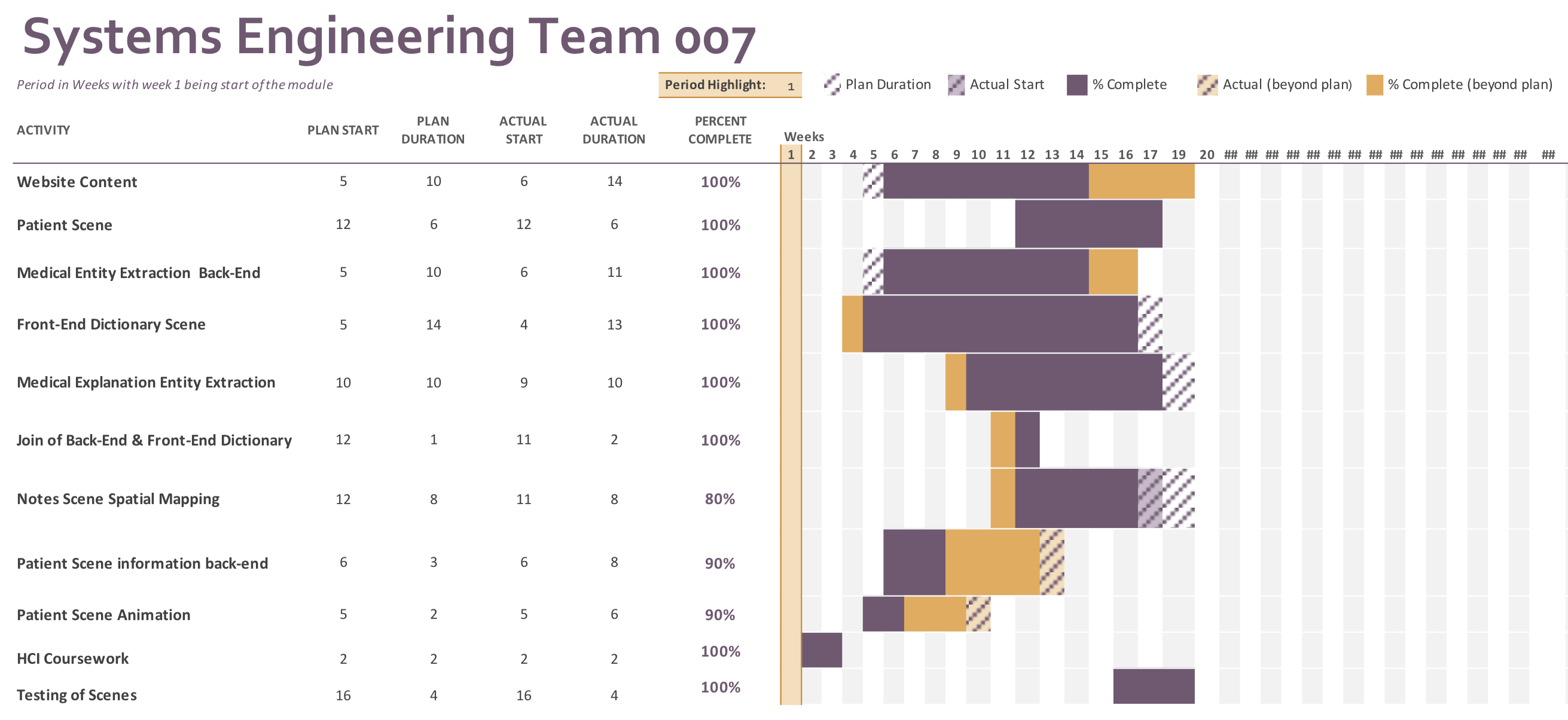

Gantt Chart

To organise the workload, we created a Gantt Chart. As can be seen below, we underestimated many of our objectives, such as the Medical Entity Extraction and the front-end of the dictionary scene. These were crucial components and hence were iterated on quickly, using a development cycle similar to Agile development practices. Because of this continuous-delivery approach, we kept iterating on and improving these scenes even after the projected deadlines on the Gantt Chart. Whether this is good or bad is debatable: had we stopped development earlier, we could have focused on something else, perhaps more important, but we wanted our application to be of the highest possible quality and as free of errors and bugs as possible, so iterating on and improving the scenes was the team's top priority.